Understanding how search engines crawl your website is essential in search engine optimization (SEO) if you want the best possible visibility and ranking in search results. The robots.txt file, a simple but effective tool that tells search engine robots where they may go on your website, is a key part of this.

What is Robots.txt?

Robots.txt is a plain text file placed in the root directory of a website. It implements the Robots Exclusion Protocol, telling search engine robots which pages or areas of the site should or should not be crawled. In this way, the file lets search engines know which parts of your site you want them to visit.

Why is Robots.txt Important for SEO?

Because it gives you control over what content search engines can crawl, this file is essential to SEO. This control matters for several reasons.

Preventing Indexing of Low-Quality or Duplicate Content:

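By restricting access to low-quality, duplicate, or irrelevant pages, you can keep search engines from crawling them, which makes them far less likely to be presented in search results. This keeps searchers from being confused by irrelevant or duplicate results and improves the overall quality of what search engines see of your website. Keep in mind that a blocked URL can still be indexed if other pages link to it, so robots.txt alone does not guarantee that a page stays out of the index.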

Protecting Sensitive Information:

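Robots.txt can also be used to keep crawlers out of private areas of your website, such as administration areas, login pages, and pages containing personal data. Note that it only asks well-behaved crawlers to stay away, and the file itself is publicly readable, so it does not stop unwanted access. Truly sensitive data still needs authentication or other access controls.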

Managing Crawl Budget:

Search engines have limited resources for crawling web pages. By blocking low-priority or unimportant pages, you help search engines focus their crawling on the most significant and relevant pages of your website.

Understanding Robots.txt Syntax

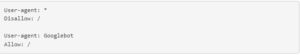

The robots.txt file uses simple directives to define which user agents (search engine robots) are allowed or disallowed from crawling specific URLs or directories. Below is a summary of the fundamental directives:

User-agent:

This directive indicates which user agents the rules that follow apply to. For instance, you can set different rules for Googlebot, Bingbot, and other search engine robots.

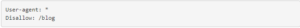
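As a rough illustration of per-agent rules, the sketch below feeds a made-up robots.txt to Python's standard `urllib.robotparser` module and asks how two crawlers would be treated. The paths `/drafts/` and `/search/` and the `example.com` domain are assumptions chosen for the example, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one group for Googlebot, one for every other crawler.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /drafts/
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot matches its own group, so only /drafts/ is off limits to it.
googlebot_search = parser.can_fetch("Googlebot", "https://example.com/search/")
# Bingbot has no group of its own and falls back to the "*" group.
bingbot_search = parser.can_fetch("Bingbot", "https://example.com/search/")

print("Googlebot may crawl /search/:", googlebot_search)
print("Bingbot may crawl /search/:", bingbot_search)
```

A crawler that finds a group naming it follows only that group, which is why the shared `/drafts/` rule has to be repeated under Googlebot here.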

Disallow:

This directive tells the specified user agent not to crawl the specified URL or directory. For example, adding “Disallow: /wp-admin/” keeps compliant crawlers out of the WordPress admin area. To stop search engines from crawling the blog and all its posts, your file might look like this:

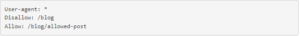
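To see how Disallow rules behave, here is a minimal sketch using Python's standard `urllib.robotparser`. The rule set and the three sample paths are hypothetical. It combines the `/wp-admin/` rule mentioned above with an assumed `/blog/` directory.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block the admin area and the whole blog for all crawlers.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /blog/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Sample paths (assumed for illustration) checked against the rules above.
paths = ["/wp-admin/options.php", "/blog/my-first-post/", "/about/"]
results = {path: parser.can_fetch("*", path) for path in paths}

for path, allowed in results.items():
    print(f"{path}: {'allowed' if allowed else 'blocked'}")
```

A Disallow value is matched as a path prefix, so `/blog/` covers every URL underneath it while `/about/` remains crawlable.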

Allow:

This directive is the opposite of Disallow: it explicitly permits the specified user agent to crawl the specified URL or directory, and is typically used to carve an exception out of a broader Disallow rule. For example, to stop search engines from crawling all of your blog posts except one, you would disallow the blog directory and add an Allow rule for the single post you want crawled.
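The "block the blog except for one post" case might be sketched as follows. The `/blog/featured-post/` path is a hypothetical name used only for illustration. One caveat: Python's `urllib.robotparser` applies the first rule that matches, whereas the Robots Exclusion Protocol standard (RFC 9309) and Google pick the most specific (longest) matching rule, so listing Allow before Disallow keeps the result the same under both interpretations.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: allow one post, block the rest of the blog.
# Allow is listed first so first-match parsers agree with longest-match ones.
ROBOTS_TXT = """\
User-agent: *
Allow: /blog/featured-post/
Disallow: /blog/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

featured_allowed = parser.can_fetch("*", "/blog/featured-post/")
other_allowed = parser.can_fetch("*", "/blog/another-post/")

print("featured post crawlable:", featured_allowed)
print("other post crawlable:", other_allowed)
```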

Creating an Effective Robots.txt File

A good robots.txt file strikes a balance between enabling search engines to find your useful content and preventing them from crawling irrelevant or unimportant pages. Here are a few guidelines for making an organized robots.txt file:

Keep it Simple: Avoid complex or ambiguous rules; use clear and concise directives.

Block Unnecessary Pages: Block pages that are low-quality, duplicate, or irrelevant to the main content of your website.

Allow Important Pages: Ensure that crawling and indexing are permitted for all significant and pertinent pages.

Use a Sitemap: Create and submit a sitemap to direct search engines to the pages you want them to index. You can also point to it from robots.txt with a Sitemap line.

Test and Monitor: Use robots.txt testing tools to ensure that your rules are working properly. As your website evolves, review and update your robots.txt file on a regular basis.
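One way to put the "test and monitor" advice into practice is a small regression check that compares your rules against the crawl behavior you intend. The sketch below is one possible approach, not an official tool: `find_rule_mismatches`, the rule set, and the expectations table are all hypothetical names and data. `site_maps()` is part of Python's `urllib.robotparser` from version 3.8 onward.

```python
from urllib.robotparser import RobotFileParser

def find_rule_mismatches(robots_text, expectations, user_agent="*"):
    """Return (path, expected, actual) tuples where the rules disagree with intent."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    mismatches = []
    for path, expected in expectations.items():
        actual = parser.can_fetch(user_agent, path)
        if actual != expected:
            mismatches.append((path, expected, actual))
    return mismatches, parser.site_maps()

# Hypothetical robots.txt that accidentally blocks the product pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tmp/
Disallow: /products/

Sitemap: https://example.com/sitemap.xml
"""

# Intended behavior: True means the path should be crawlable.
EXPECTATIONS = {
    "/": True,
    "/products/widget": True,
    "/tmp/cache.html": False,
}

mismatches, sitemaps = find_rule_mismatches(ROBOTS_TXT, EXPECTATIONS)
for path, expected, actual in mismatches:
    print(f"{path}: expected crawlable={expected}, rules give {actual}")
print("Sitemaps declared:", sitemaps)
```

Running a check like this whenever the file changes makes an accidental block of an important section show up as a mismatch rather than as a drop in search traffic.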

Common Robots.txt Mistakes

Avoid these common mistakes when using a robots.txt file:

Blocking Essential Pages: When significant pages are unintentionally blocked, your website’s SEO may suffer.

Over-blocking Content: Blocking too much content can make it more difficult for search engines to fully understand the organization and relevancy of your website.

Ignoring Robots.txt: Search engines may ignore rules in a robots.txt file that is overly complex or confusing, for example rules with misspelled directives.
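Some of these mistakes can be caught with a simple lint pass before the file goes live. The following is a rough heuristic sketch, not a complete validator: the `lint_robots` function and the set of recognized directive names are assumptions made for this example, and real crawlers differ in which directives they honor.

```python
# Directive names treated as known here (an assumption for this sketch;
# support for Crawl-delay in particular varies between crawlers).
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}

def lint_robots(text):
    """Return a list of (line_number, message) warnings for a robots.txt body."""
    warnings = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append((number, "missing ':' separator"))
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        if name.lower() not in KNOWN_DIRECTIVES:
            warnings.append((number, f"unrecognized directive '{name}'"))
        elif name.lower() == "disallow" and value == "/":
            warnings.append((number, "blocks the entire site for this group"))
    return warnings

# Hypothetical file containing a typo, a blanket block, and a malformed line.
SAMPLE = """\
User-agent: *
Dissallow: /private/
Disallow: /
Noindex /old/
"""

warnings = lint_robots(SAMPLE)
for number, message in warnings:
    print(f"line {number}: {message}")
```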

Conclusion

Robots.txt is an essential tool for managing website crawlability and improving SEO performance. By understanding its purpose, syntax, and best practices, you can efficiently direct search engines to the most important content on your website, which helps your site get indexed and ranked correctly in search results.

Frequently Asked Questions!

Q. What happens if I don’t have a robots.txt file?

Ans: If you don’t have a robots.txt file, search engine robots will assume they are free to crawl every page on your website. This could result in irrelevant or low-quality pages being indexed, which could hurt your SEO.
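Python's standard `urllib.robotparser` handles an empty rule set the same way, and a short sketch can make the default concrete. Parsing an empty list of lines here stands in for a missing file, and the sample paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([])  # no rules at all, standing in for a missing robots.txt

# With no rules, every path is treated as crawlable.
sample_paths = ["/", "/wp-admin/", "/blog/some-post/"]
everything_allowed = all(parser.can_fetch("*", path) for path in sample_paths)

print("all sample paths crawlable:", everything_allowed)
```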

Q. How can I test my robots.txt file?

Ans: You can use a number of online tools to test your robots.txt file. Google Search Console is one popular option; it offers a robots.txt report, the successor to Google's older Robots.txt Tester tool.

Q. How often should I update my robots.txt file?

Ans: You should update your robots.txt file every time you make a major revision to the content or structure of your website. This ensures that search engines always know your current crawling preferences.

Q. What is the difference between robots.txt and meta robots?

Ans: Robots.txt is a file located in the root directory of your website, while meta robots is an HTML tag placed on individual pages. Both can be used to manage crawling and indexing, but they work at different levels: robots.txt applies to the entire website and controls which URLs crawlers may fetch, while a meta robots tag applies to a single page and controls how that page is indexed.
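The two levels can be illustrated side by side. In this sketch, which uses only Python's standard library, the site-wide rules are checked with `urllib.robotparser` and the page-level tag is read with `html.parser`. The `MetaRobotsParser` class, the sample HTML, and the `/old-page/` path are hypothetical and exist only for this example.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

class MetaRobotsParser(HTMLParser):
    """Collect the directives from any <meta name="robots"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]

# Site level: hypothetical robots.txt that only blocks /admin/.
ROBOTS_TXT = "User-agent: *\nDisallow: /admin/\n"
# Page level: hypothetical page that asks not to be indexed.
PAGE_HTML = '<html><head><meta name="robots" content="noindex, follow"></head><body></body></html>'

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())
meta = MetaRobotsParser()
meta.feed(PAGE_HTML)

crawlable = robots.can_fetch("*", "/old-page/")   # decided by robots.txt
indexable = "noindex" not in meta.directives       # decided by the page itself

print("crawlable:", crawlable, "| indexable:", indexable)
```

The page in this example can be crawled but asks not to be indexed. A crawler has to be able to fetch a page to see its meta robots tag, so blocking a page in robots.txt can keep a noindex tag on it from ever being read.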

Q. What are some best practices for using robots.txt?

Ans: The following are some guidelines for using robots.txt:

- Keep it simple and easy to understand

- Block unnecessary pages

- Allow important pages

- Use a sitemap to guide search engines

- Test and monitor your file regularly
